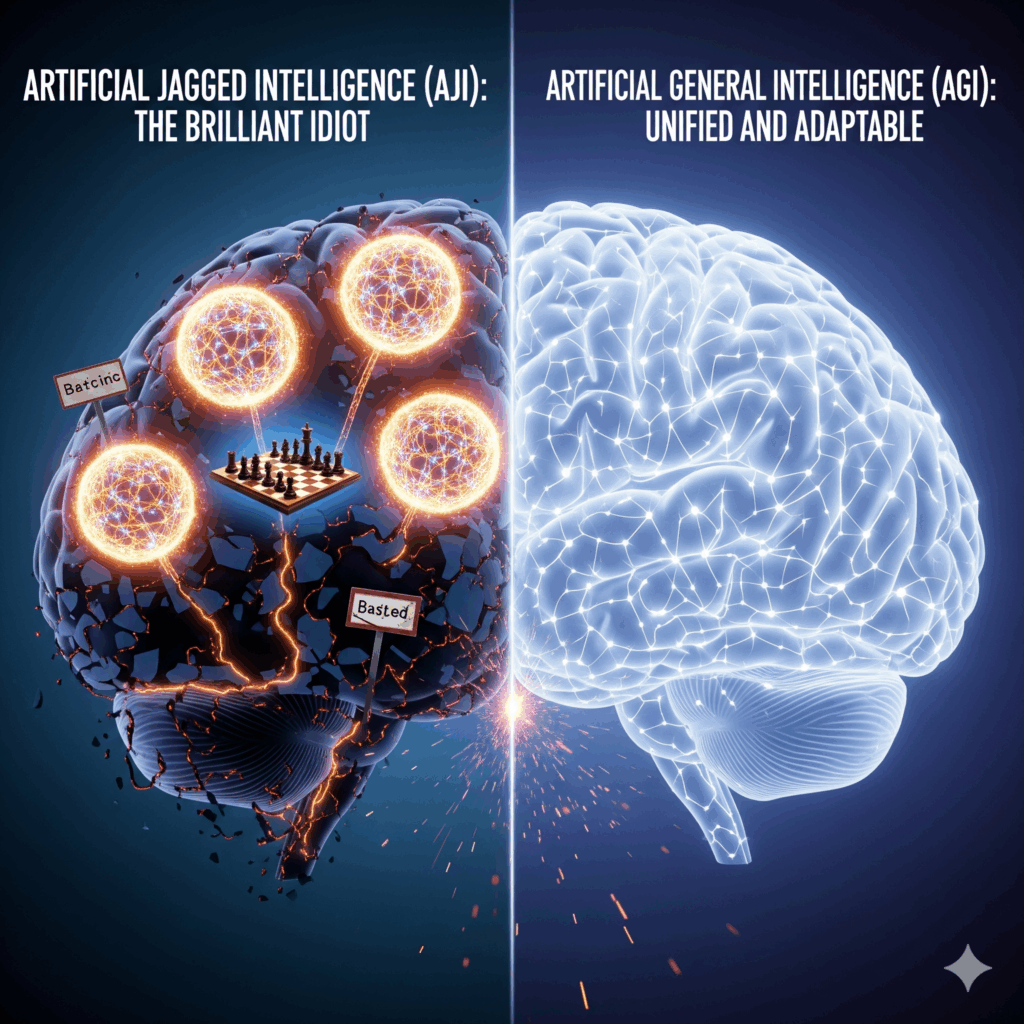

The “Jagged” Reality of AI Today

Google DeepMind CEO Demis Hassabis just dropped a bombshell: today’s AI isn’t *generally* intelligent. It’s “artificial jagged intelligence” (AJI).

What does that mean?

– Gemini can win gold at the International Math Olympiad (IMO 2025, scoring 35/42 points), yet fumbles high-school algebra.

– Grok 4 swept early rounds of Kaggle’s AI chess tournament 4–0, only to blunder its queen repeatedly in the finals against OpenAI’s o3.

– AJI = elite in niche tasks, brittle in basics — like a chess grandmaster who forgets how pawns move.

Why AJI ≠ AGI (And Why Grok’s Chess Meltdown Proves It)

Many assume AGI (Artificial General Intelligence) is just “AJI, but better.”

That’s completely wrong.

– AJI excels in spikes (e.g., Grok 4 scores 100% on AIME25 math, yet fails LiveBench’s real-world tasks).

– AGI requires balanced reasoning — human-like adaptability across *all* tasks, not just cherry-picked benchmarks.

– Current fixes (more data, compute) won’t bridge the gap. Hassabis says breakthroughs in planning, memory, and reasoning are non-negotiable.
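One rough way to make "spiky versus balanced" concrete is to look at the worst score and the spread across tasks, not just the average. The sketch below is only an illustration: the `jaggedness` function, the task names, and every score in it are invented for the example and are not measurements of any real model.

```python
from statistics import mean
from typing import Dict


def jaggedness(scores: Dict[str, float]) -> Dict[str, float]:
    """Summarize a score profile (values in [0, 1]) by mean, worst case, and spread."""
    values = list(scores.values())
    return {
        "mean": mean(values),
        "worst": min(values),
        "spread": max(values) - min(values),  # large spread = jagged profile
    }


# Hypothetical profiles: all numbers are made up for illustration only.
spiky_profile = {
    "olympiad_math": 0.95,
    "competitive_coding": 0.90,
    "basic_algebra": 0.40,
    "counting": 0.30,
}
balanced_profile = {
    "olympiad_math": 0.65,
    "competitive_coding": 0.65,
    "basic_algebra": 0.62,
    "counting": 0.63,
}

if __name__ == "__main__":
    for name, profile in [("spiky", spiky_profile), ("balanced", balanced_profile)]:
        print(name, jaggedness(profile))
```

The two invented profiles have nearly the same mean, which is the point: an average (or a single headline benchmark) can look identical while the worst-case score and the spread tell very different stories.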

Grok’s chess collapse is a microcosm of AJI’s flaws:

1. Inconsistency: Crushed Gemini 2.5 Flash 4–0 in semifinals, then folded under pressure.

2. Overconfidence: Pre-tournament swagger (“I’m the smartest AI”) clashed with a 32% drop in move accuracy.

3. Brittle Reasoning: Even OpenAI’s o3, while victorious, struggled with endgames, a weakness common across current LLMs.

The Cold Shower for AI Optimists

If you think AGI is 2–3 years away, Hassabis’ 5–10 year timeline might sting.

Why?

1. Inconsistency is systemic — AJI models ace complex coding (Gemini 2.5 DeepThink hits 87.6% on LiveCodeBench) but fail counting tasks.

2. Reasoning ≠ scaling — Throwing GPUs at the problem won’t fix flawed logic chains.

3. No continuous learning — GPT-5 still can’t autonomously adapt to new info, a core AGI trait.

The Path Forward: Fixing AI’s “Brilliant Idiot” Problem

To bridge the chasm between today’s uneven AJI and true AGI, the industry must address three critical gaps:

1. Stress-Test Benchmarks

Current tests like math Olympiads hide AI’s inconsistency: Gemini solves Olympiad problems (35/42 points) but fails basic algebra. IBM shows LLMs lose 30% accuracy outside trained contexts, demanding dynamic evaluations.
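A dynamic evaluation can be as simple as generating fresh variants of an easy problem and checking whether accuracy holds across all of them. Here is a minimal sketch under stated assumptions: `brittle_solver` is a hypothetical stand-in for a model (it is not any real system), and the problem template and function names are invented for illustration.

```python
import random
import re
from typing import Callable, List, Tuple

Problem = Tuple[str, int]  # (prompt, correct answer)
_PATTERN = re.compile(r"(-?\d+)\*x \+ (-?\d+) = (-?\d+)")


def make_linear_problems(n: int, seed: int = 0) -> List[Problem]:
    """Generate n variants of 'a*x + b = c' that all have an integer solution."""
    rng = random.Random(seed)
    problems = []
    for _ in range(n):
        a = rng.randint(2, 9)
        x = rng.randint(-10, 10)
        b = rng.randint(-20, 20)
        problems.append((f"Solve for x: {a}*x + {b} = {a * x + b}", x))
    return problems


def parse(prompt: str) -> Tuple[int, int, int]:
    """Extract (a, b, c) from a prompt produced by make_linear_problems."""
    a, b, c = map(int, _PATTERN.search(prompt).groups())
    return a, b, c


def exact_solver(prompt: str) -> int:
    a, b, c = parse(prompt)
    return (c - b) // a


def brittle_solver(prompt: str) -> int:
    """Hypothetical 'model': right when b >= 0, but drops the sign of a negative b."""
    a, b, c = parse(prompt)
    return (c - abs(b)) // a


def accuracy(solver: Callable[[str], int], problems: List[Problem]) -> float:
    """Fraction of problems the solver answers correctly (problems must be non-empty)."""
    return sum(solver(p) == answer for p, answer in problems) / len(problems)


if __name__ == "__main__":
    problems = make_linear_problems(200)
    print("exact solver:  ", accuracy(exact_solver, problems))
    print("brittle solver:", accuracy(brittle_solver, problems))
```

A fixed test set containing only non-negative constants would score the brittle solver as perfect. Regenerating variants on every run is what exposes the blind spot, which is the basic argument for dynamic rather than static benchmarks.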

2. Team-Based Reasoning

Single AI models fail where collaborative systems succeed. Multi-agent systems (like MACI) boost planning accuracy by 40% by dividing tasks, mirroring how human teams overcome complex problems.

3. Reliable Memory Systems

Without memory, AIs “forget” mid-task (Gemini remembers openings but botches chess endgames). New architectures like IBM’s CAMELoT (30% fewer errors) and Larimar’s brain-inspired updates fix this.

The Bottom Line: AGI isn’t just “smarter AI” — it’s a fundamental architectural shift. Until then, we’re stuck with “brilliant idiots.”
