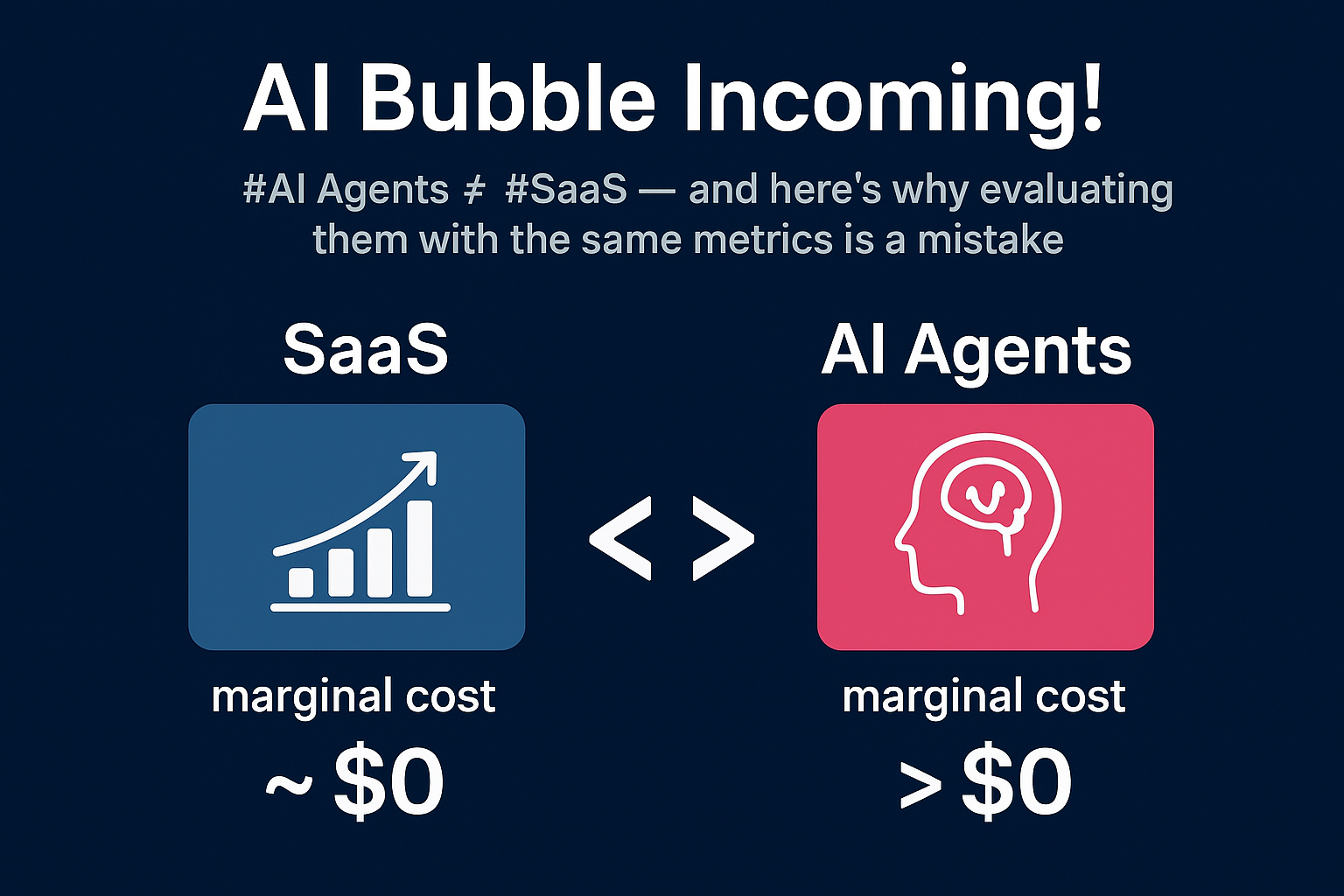

#AI Agents ≠ #SaaS — and here’s why evaluating them with the same metrics is a mistake.

Too many AI agent startups — and even their investors — are defaulting to the classic SaaS growth playbook: scale rapidly, drive ARR, raise on momentum. But what’s often overlooked is the underlying unit economics. Unlike SaaS, where marginal costs approach zero, AI-native products carry ongoing token and compute costs per user action — fundamentally altering their scalability and profitability models.

SaaS marginal cost ~ $0

Once infra is set up, adding an extra user barely costs anything.

AI Agents marginal cost > $0

Every single user action burns tokens, GPU compute, and energy — costs that don’t trend to zero even at scale.

Here’s why this matters deeply:

RoI Calculus Shifts

Traditional SaaS metrics like LTV:CAC are incomplete for AI agents. You now need to factor in:

a) Average tokens consumed per user action

b) Effective token pricing after batching, caching, quantization

c) Model orchestration strategies, e.g. routing lightweight tasks such as social media captions to cost-efficient models like o4-mini and assigning complex, long-form content generation to more powerful models like Claude Sonnet 4, so the platform optimizes both quality and token cost

d) Infra hosting vs API usage breakeven points
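To make factors a) through d) concrete, here is a minimal Python sketch of a per-action cost model. Everything in it is an assumption for illustration: the function names are hypothetical, the prices are placeholders rather than vendor quotes, and the caching discount and routing share are made-up inputs you would replace with your own measurements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelPrice:
    """Price in USD per 1M tokens (illustrative placeholders, not quotes)."""
    input_per_m: float
    output_per_m: float


def action_cost(price: ModelPrice, input_tokens: int, output_tokens: int,
                cached_fraction: float = 0.0, cache_discount: float = 0.0) -> float:
    """USD cost of one user action.

    cached_fraction: share of input tokens served from a prompt cache.
    cache_discount:  fractional price cut applied to those cached tokens.
    """
    billable_input = input_tokens * (1.0 - cached_fraction * cache_discount)
    return (billable_input * price.input_per_m
            + output_tokens * price.output_per_m) / 1_000_000


def blended_cost(small_cost: float, large_cost: float, share_to_small: float) -> float:
    """Average cost per action when a router sends a share of traffic to the small model."""
    return share_to_small * small_cost + (1.0 - share_to_small) * large_cost


def breakeven_actions(fixed_hosting_usd: float, api_cost: float,
                      hosted_cost: float) -> Optional[float]:
    """Monthly actions at which self-hosting matches API spend, or None if it never does."""
    saving = api_cost - hosted_cost
    return fixed_hosting_usd / saving if saving > 0 else None


# Hypothetical inputs: 2,000 input and 500 output tokens per action.
large = action_cost(ModelPrice(3.0, 15.0), 2000, 500)
small = action_cost(ModelPrice(1.0, 4.0), 2000, 500)
print(f"large model: ${large:.4f} per action")
print(f"small model: ${small:.4f} per action")
print(f"80% routed to small: ${blended_cost(small, large, 0.8):.4f} per action")
print("breakeven actions/month:", breakeven_actions(5000.0, large, 0.0035))
```

The point of the sketch is that each lever (caching, routing, hosting) shows up as a separate input, so you can see which one moves cost per action the most for your own traffic.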

Gross Margins are Tied to User Behavior

In SaaS, power users are great — they increase #LTV with minimal cost impact. In AI agents, heavy users directly eat into your margins unless pricing scales with usage. The more your product is used, the more it costs you — a paradox that defeats traditional SaaS logic.
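A quick sketch of how that plays out under a flat subscription. The $30 monthly price and $0.01 cost per action are hypothetical numbers chosen only to show the shape of the curve.

```python
def gross_margin(monthly_price: float, actions_per_month: int,
                 cost_per_action: float) -> float:
    """Gross margin for one user on a flat plan, counting only inference cost as COGS."""
    cogs = actions_per_month * cost_per_action
    return (monthly_price - cogs) / monthly_price


# Hypothetical plan: $30/month flat, $0.01 inference cost per action.
for actions in (100, 1_000, 5_000):
    print(f"{actions:>5} actions/month -> margin {gross_margin(30.0, actions, 0.01):.0%}")
```

With these assumed numbers, a light user leaves a margin in the high nineties, while a user at 5,000 actions a month is served at a loss.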

For example:

Claude Sonnet 4's list price for output tokens is $15 per million, so $1 buys about 67k tokens, or roughly 50k words at a rule-of-thumb 0.75 words per token.

If your AI agent outputs large structured summaries, code, or customer service responses for hundreds of daily users, costs balloon unless each task drives value far exceeding token burn.
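As a rough worked example, the sketch below turns the $15/M output price into a daily bill. The user count, tasks per user, and tokens per task are assumptions, and input tokens are left out, so real costs would be higher.

```python
OUTPUT_PRICE_PER_M = 15.0  # USD per 1M output tokens, the list price cited above


def daily_output_cost(users: int, tasks_per_user: int, output_tokens_per_task: int,
                      price_per_m: float = OUTPUT_PRICE_PER_M) -> float:
    """USD spent per day on output tokens only."""
    tokens = users * tasks_per_user * output_tokens_per_task
    return tokens * price_per_m / 1_000_000


# Hypothetical load: 300 daily users, 10 tasks each, 800 output tokens per task.
per_day = daily_output_cost(300, 10, 800)
print(f"${per_day:.2f} per day, about ${per_day * 30:,.0f} per 30-day month")
```

Under these assumptions that is 2.4M output tokens a day, or $36 daily and about $1,080 a month before counting any input tokens.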

Pricing Models Need Rethinking

Usage-based pricing aligns cost with value but creates user anxiety. Flat-rate pricing risks negative margins if usage is unbounded. No one has yet cracked a truly scalable, AI-native pricing model that balances user RoI with vendor profitability.
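One way to frame the trade-off is to ask what each model implies for a target gross margin. The sketch below is illustrative only: the helper names, the 70% target, and the prices are all assumptions, not a recommended pricing scheme.

```python
def flat_rate_usage_cap(monthly_price: float, cost_per_action: float,
                        target_margin: float) -> float:
    """Max actions per month a flat plan can absorb while holding the target margin."""
    return monthly_price * (1.0 - target_margin) / cost_per_action


def usage_price_per_action(cost_per_action: float, target_margin: float) -> float:
    """Per-action price that yields the target margin under usage-based pricing."""
    return cost_per_action / (1.0 - target_margin)


# Hypothetical: $30/month plan, $0.01 cost per action, 70% target gross margin.
print(f"flat plan holds margin up to {flat_rate_usage_cap(30.0, 0.01, 0.7):.0f} actions/month")
print(f"usage-based price: ${usage_price_per_action(0.01, 0.7):.4f} per action")
```

The flat plan only works while usage stays under the cap, and the usage-based price protects margin at any volume but puts a visible meter on every action, which is where the user anxiety comes from.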

Strategic Moats are Different

In SaaS, moats relied on workflow lock-in, data, and integrations. In AI agents, moats come from:

a) Access to proprietary LLMs

b) On-device inference to lower token costs

c) Efficient RAG that minimizes prompt and output length

d) Using aggregated usage data to fine-tune smaller, task-specific models for better efficiency

Bottom line: AI agent startups need a Tokenomics-Adjusted RoI Model

1) What is the per-task economic value vs token cost?

2) How will model orchestration evolve to sustain margins?

3) Can your pricing model balance adoption with profitability?

4) Where is your true moat — proprietary data, distribution, optimized models, or infra ownership?
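Question 1 can be sketched as a simple value-to-cost check per task. The function names and the dollar values are hypothetical. Estimating what a task is actually worth to the customer is the hard part, and this code does not solve it.

```python
def value_to_cost_ratio(value_per_task_usd: float, token_cost_usd: float) -> float:
    """How many dollars of customer value each dollar of token spend produces."""
    return value_per_task_usd / token_cost_usd


def net_value_per_task(value_per_task_usd: float, token_cost_usd: float) -> float:
    """Value left over per task after paying for tokens."""
    return value_per_task_usd - token_cost_usd


# Hypothetical: a task worth $0.50 to the customer that costs $0.0135 in tokens.
print(f"ratio: {value_to_cost_ratio(0.50, 0.0135):.1f}x")
print(f"net value per task: ${net_value_per_task(0.50, 0.0135):.4f}")
```

Tracking this ratio per task type, rather than as one blended number, shows which workflows carry the margin and which ones burn tokens without paying for themselves.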

This is where real business model innovation in AI will happen. 🚀